Alexa Espionage: Uncovering The Truth Through A Quick Network Analysis

TL;DR

- I analyzed 4 hours of Alexa network traffic across four scenarios: two with annotated interactions and conversations, one with Alexa muted, and one with Alexa unmuted but isolated.

- I found some anomalies and traffic spikes for a few domains, especially Minerva Devices and Device Metrics, during muted and non-triggered conversations.

- This indicates that Alexa might be using some local processing to detect keywords and send relevant conversations to Amazon.

- Outside of this theory, it does not appear that Alexa listens to non-woken conversations.

- More recordings with different topics and keywords are needed to test the hypothesis.

Intro:

Something always appears to be creeping on conversations. It can sometimes be felt when you have a conversation, and without ever googling the topic, you suddenly see ads related to the product. Perhaps the Baader-Meinhof phenomenon is truly what’s happening, but there’s always a chance it isn’t – given how sketchy a lot of aspects of social media are. So, I decided to take a behavioral approach to the Alexa, and see if it’s doing anything when it isn’t supposed to.

Background:

Alexa’s traffic is encrypted, and multiple people have already studied it. There are articles on people trying to get Alexa’s firmware, and a few public-facing pages that analyze Alexa’s traffic, but nothing public really satisfied my curiosity. The closest publication I could find to what I wanted to do was “Alexa, are you listening to me? An analysis of Alexa voice service network traffic” by Marcia Ford and William Palmer. But that is behind a paywall, so I know neither their conclusions nor the full details of their methods, just the freely available diagrams.

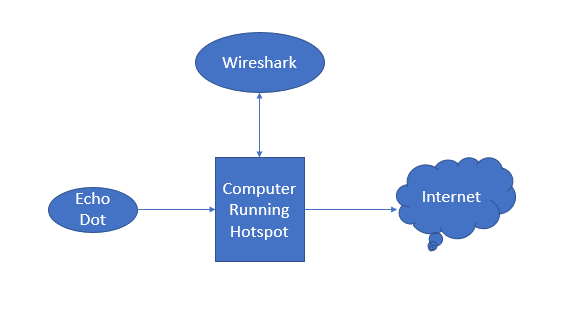

To start, my setup looks like this – I am testing with an Echo Dot with the latest firmware as of 3/14/2023 (8289070468):

One immediate caveat is that there’s obviously the potential for dropped packets, since this runs over a hotspot instead of a direct Ethernet connection.

Assumptions (aka not necessarily true, but I cannot prove one way or another):

- If Alexa is listening and sending the recordings without wake word back to Amazon, it isn’t doing this via a delayed batch (e.g. record x hours of conversations and then send the entire thing over).

- Client Hello and Server Hello domains are not misrepresenting intended purposes (more on this later)

- Alexa is not covertly padding heartbeat traffic to disguise audio uploads

With this in mind, the following scenarios were recorded:

- Two 1-hour recordings with annotated timestamps of when Alexa was interacted with and when conversations took place near the Alexa

- One 1-hour recording with Alexa muted

- One 1-hour recording with Alexa unmuted and in a completely separate room, where nobody would interact with it and it wouldn’t accidentally pick up conversations.

Loading the Data

Now that I have the recordings, the next step was to load them somewhere for analysis. Python and Pandas are the easiest tools for me to look at the data, so that’s what I am using. I open the PCAP file in Wireshark and export just the fields I find useful to a CSV for loading into Pandas. The columns I used are the following:

- Time

- Source

- Source Port

- Destination

- Destination Port

- Protocol

- Length

- Info Field

- Server Name

- Server Name Type

- Host
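A minimal sketch of that loading step, assuming the CSV export has one column per field in the order listed above. The column names, the `load_capture` helper, and the two sample rows (including the IP addresses) are made up for illustration and are not taken from the real capture.

```python
import io
import pandas as pd

# Assumed column names: they mirror the field list above, not necessarily
# the exact headers Wireshark writes into the CSV.
COLUMNS = [
    "Time", "Source", "Source Port", "Destination", "Destination Port",
    "Protocol", "Length", "Info", "Server Name", "Server Name Type", "Host",
]

def load_capture(csv_source):
    """Load a Wireshark CSV export and coerce the numeric columns."""
    df = pd.read_csv(csv_source, header=0, names=COLUMNS)
    df["Time"] = pd.to_numeric(df["Time"], errors="coerce")
    df["Length"] = pd.to_numeric(df["Length"], errors="coerce")
    # Rows without a usable time or length cannot be charted, so drop them.
    return df.dropna(subset=["Time", "Length"]).reset_index(drop=True)

# Tiny made-up sample standing in for a real export.
sample = io.StringIO(
    "Time,Source,Source Port,Destination,Destination Port,Protocol,Length,"
    "Info,Server Name,Server Name Type,Host\n"
    "0.00,192.168.1.20,50000,52.0.0.10,443,TLSv1.2,571,Client Hello,"
    "api.amazonalexa.com,0,\n"
    "0.05,52.0.0.10,443,192.168.1.20,50000,TLSv1.2,1514,Server Hello,,,\n"
)
packets = load_capture(sample)
```

For a real capture, `load_capture("recording.csv")` would take a file path instead of the in-memory sample (the file name here is hypothetical).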

Using the Client and Server Hellos, you can identify most of the traffic and where it is going (this is where the Client Hello assumption comes into play). There appear to be 7 primary domains that Alexa talks to. I also explicitly label the IP address that handles Alexa questions as a separate label, which brings us to 8:

- XXXXX.us-east-1.prod.service.minerva.devices.a2z.com

- Not sure what this is for. Google doesn’t really say much other than the Minerva website https://www.minervanetworks.com/company-overview/#title1 – this might be related to some TV on my network or something. I suspect it’s related to the Samsung Frame TV since, despite me never connecting it to Alexa, it shows up as a suggested device to integrate.

- This will show up in charts as “Alexa Minerva Devices”

- web.diagnostic.networking.aws.dev

- Pretty straightforward, supposedly the diagnostic server

- Shows up in charts as “Alexa Diagnostics”

- api.amazonalexa.com

- Alexa API – that’s also how it shows up in the charts

- api.amazon.com

- This one was odd, only showed up once during the 4 hours of recordings.

- Displayed as “Amazon API”

- device-metrics-us-2.amazon.com

- Metrics, my guess is usage statistics but who knows

- Displayed as “Alexa Device Metrics”

- device-messaging-na.amazon.com

- Not sure what for

- Displayed as “Alexa Device Messaging”

- dss-na.amazon.com

- No idea what this is

- Displayed as “Amazon DSS-NA”
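The labelling described above can be sketched roughly as follows. This is an assumption about how it could be done, not the exact code behind the charts: the server name seen in a Client Hello is matched against the domain list, and that label is then carried over to every other packet exchanged with the same remote IP. `DOMAIN_LABELS`, `label_for`, `label_packets`, and the `Remote` and `Label` columns are hypothetical names.

```python
import pandas as pd

# Domain suffix -> chart label, copied from the list above.
DOMAIN_LABELS = {
    "minerva.devices.a2z.com": "Alexa Minerva Devices",
    "web.diagnostic.networking.aws.dev": "Alexa Diagnostics",
    "api.amazonalexa.com": "Alexa API",
    "api.amazon.com": "Amazon API",
    "device-metrics-us-2.amazon.com": "Alexa Device Metrics",
    "device-messaging-na.amazon.com": "Alexa Device Messaging",
    "dss-na.amazon.com": "Amazon DSS-NA",
}

def label_for(server_name):
    """Return the chart label for a server name, or None if unknown."""
    if not isinstance(server_name, str):
        return None
    for suffix, label in DOMAIN_LABELS.items():
        if server_name == suffix or server_name.endswith("." + suffix):
            return label
    return None

def label_packets(df, device_ip):
    """Label every packet by the domain its remote IP was seen serving."""
    out = df.copy()
    # The remote endpoint is whichever side of the packet is not the Echo.
    out["Remote"] = out["Destination"].where(out["Source"] == device_ip, out["Source"])
    ip_to_label = {}
    hellos = out.dropna(subset=["Server Name"])
    for ip, name in zip(hellos["Remote"], hellos["Server Name"]):
        label = label_for(name)
        if label is not None:
            ip_to_label[ip] = label
    out["Label"] = out["Remote"].map(ip_to_label).fillna("Unlabelled")
    return out
```

One limitation of this approach: if a single IP serves more than one domain, the last server name seen wins, so the labels are only as reliable as the Client Hello assumption itself.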

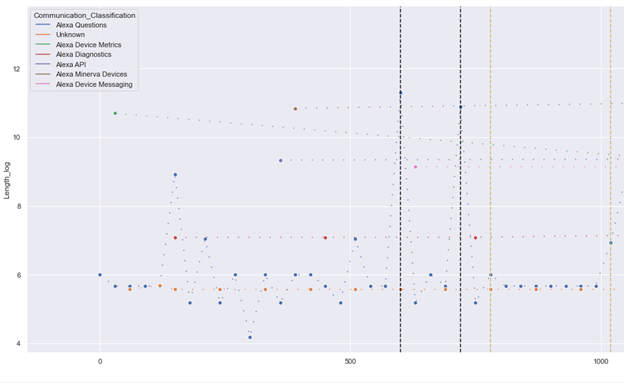

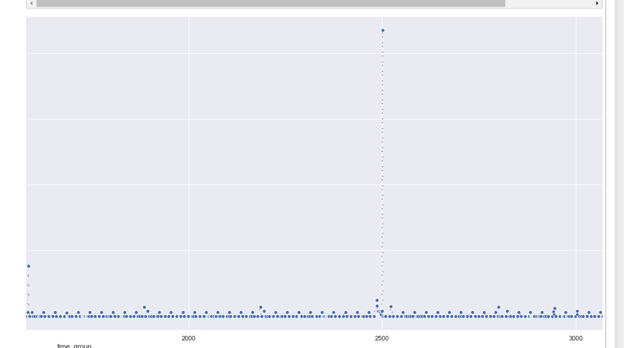

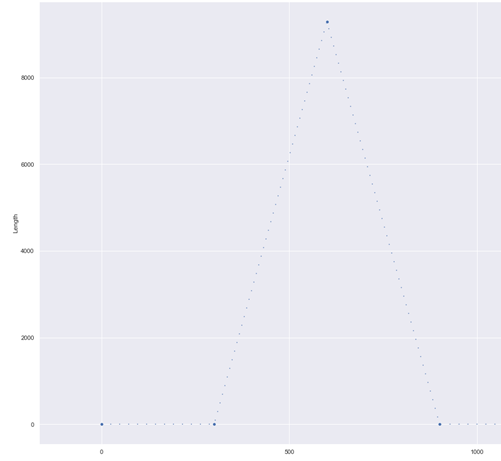

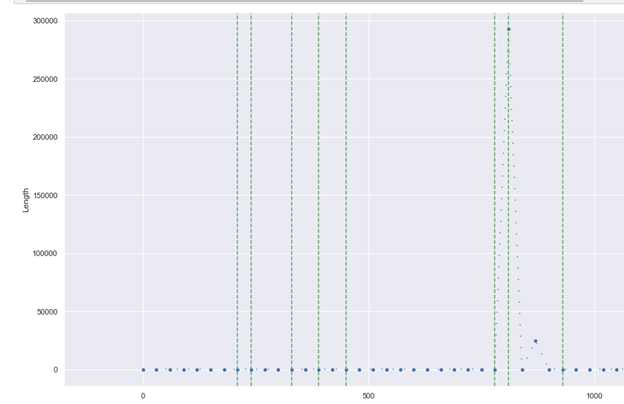

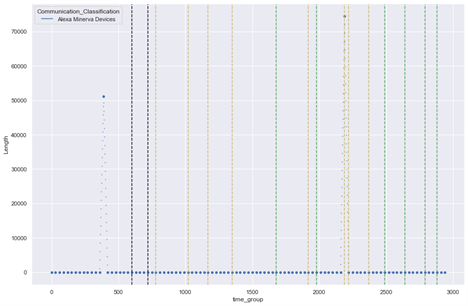

At the start, here’s what I get:

I removed all local connections and multi-cast connections for this as they shouldn’t be related.
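One way to do that filtering, sketched with Python's standard `ipaddress` module. The helper names and the idea of checking the remote side of each packet are assumptions; the original filtering may have been done differently (for example, directly in Wireshark).

```python
import ipaddress
import pandas as pd

def is_external(addr):
    """True if addr parses as an IP that is neither local nor multicast."""
    try:
        ip = ipaddress.ip_address(str(addr))
    except ValueError:
        return False  # not an IP address at all (e.g. a MAC or empty field)
    return not (
        ip.is_private or ip.is_multicast or ip.is_loopback
        or ip.is_link_local or ip.is_unspecified
    )

def drop_local_traffic(df, device_ip):
    """Keep only packets whose non-Echo endpoint is an external address."""
    remote = df["Destination"].where(df["Source"] == device_ip, df["Source"])
    return df[remote.map(is_external)].reset_index(drop=True)
```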

The Y axis is logarithmic since there are random spikes and Alexa Device Metrics constantly uses more data than the rest.

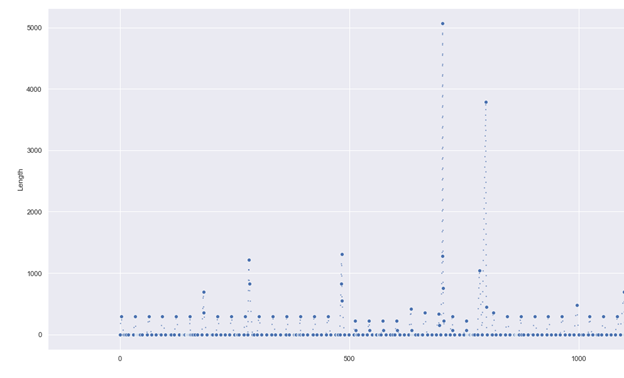

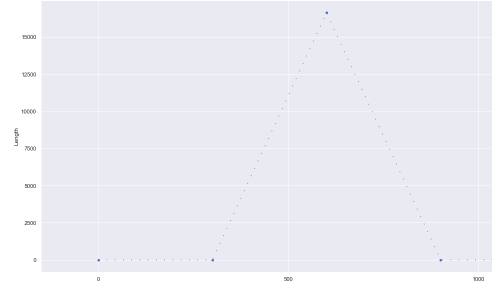

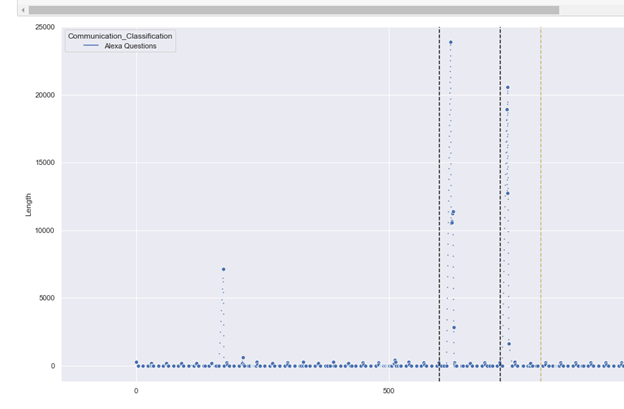

Let’s look at a 10 minute snapshot of one of the recordings where I explicitly made Alexa calls:

The black vertical lines are when Alexa was explicitly called. Yellow is when there are very short conversations or only one side of a conversation. Green vertical lines (not shown) indicate full-blown conversations such as the below:

The very small dots are where there is no data. The larger circles are the actual data points for packets.

Immediately, the interesting thing to me is that the “Alexa Questions” server basically has a constant stream. This looks to be mainly due to a 30 second heartbeat, which is almost always the exact same size too, except when there are questions.

There’s obviously a little bit of variance, mainly because the interval isn’t exactly 30 seconds all the time. Sometimes the heartbeats are slightly quicker or slower, which causes the graph to combine an extra heartbeat into one bucket instead of the one after it.

But this is great, because we can take the fully muted data and use it to create a full profile of the length of Alexa Questions traffic. If anything deviates when no questions are asked, that should make any kind of snooping blatantly obvious.
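The 30 second bucketing mentioned above can be sketched like this, assuming a DataFrame with `Time` (seconds since capture start), `Length` (bytes), and a per-packet `Label` column. The function name and the `Bucket` index name are hypothetical. Empty buckets are filled with zeros so that quiet intervals still count toward any later average.

```python
import pandas as pd

def bytes_per_bucket(df, bucket_seconds=30):
    """Total bytes per label in fixed-width time buckets."""
    # Bucket 0 covers [0, 30), bucket 1 covers [30, 60), and so on.
    bucket = (df["Time"] // bucket_seconds).astype(int).rename("Bucket")
    table = (
        df.groupby([bucket, "Label"])["Length"]
        .sum()
        .unstack("Label", fill_value=0)
    )
    # Include buckets with no traffic at all as explicit zero rows.
    full_index = pd.RangeIndex(int(bucket.max()) + 1, name="Bucket")
    return table.reindex(full_index, fill_value=0)
```

With fixed bucket edges, a heartbeat arriving at 29.9 seconds and the next at 59.8 seconds land in buckets 0 and 1, but one at 30.1 followed by one at 59.9 both land in bucket 1, which is the doubling effect described above.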

Let’s ignore this dataset for now and move to the muted Alexa recording so we can create some clean, untouched baselines.

Muted Alexa:

Except for the very start of network communications, muted Alexa stays slightly above zero pretty much at all times, with small bursts to 5,000 – 10,000 bytes. At the 41 minute mark, though, the “Question Server” switches IPs, which causes a new burst similar to the very start:

Let’s contrast this with the Alexa that is unmuted with zero questions:

It should be noted that I did not restart or re-connect the Echo after unmuting, so there is no initialization spike. But the traffic seems to be going as expected, between 0 and 5,000 bytes throughout:

The one domain that is pretty frequently talked about online is “device metrics”, which some have speculated is where the data collection happens. Instead of just looking at that one domain, let’s calculate the muted baseline for each domain, along with the standard deviation per 30 second interval (since the heartbeats are usually 30 seconds apart). Then we can see if any domain deviates significantly between unmuted and muted, and compare that to the two recordings with known conversations and Alexa calls.
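A rough sketch of that comparison, assuming each recording has already been reduced to a table with one row per 30 second interval and one column per domain label, holding bytes transferred. The function name, the column names, and the default threshold of 3 standard deviations are assumptions for illustration.

```python
import pandas as pd

def flag_deviations(muted, other, threshold=3.0):
    """Compare another recording's per-interval max to the muted baseline."""
    stats = pd.DataFrame({
        "muted_mean": muted.mean(),
        "muted_std": muted.std(ddof=0),
        "other_max": other.max(),
    })
    excess = stats["other_max"] - stats["muted_mean"]
    # A zero standard deviation (e.g. a single event) makes the score
    # undefined, so it is left as NaN and never flagged automatically.
    stats["z"] = excess / stats["muted_std"].replace(0, float("nan"))
    stats["flag"] = stats["z"] > threshold
    return stats
```

Domains that appear in only one of the two recordings end up with missing values and are not flagged, so they still need a manual look.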

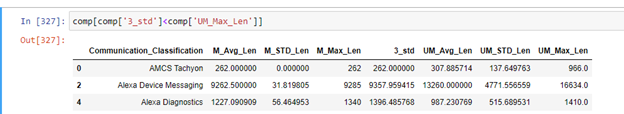

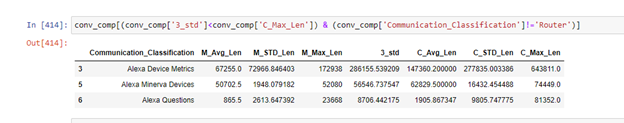

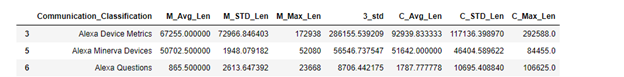

After computing the average and standard deviation for the muted recording and the max for the unmuted one, only 3 domains stood out:

I have zero idea what AMCS Tachyon is, although this website says it’s for Drop-In which is enabled on my Echo, so I will assume that’s true. Additionally, the max length is not significantly different from the average, and since there was only 1 event the standard deviation is zero. Going to move on from this one.

Alexa Diagnostics, same thing, barely above 3 standard deviations.

Alexa Device Messaging – this one is interesting. Both charts show essentially the same pattern: two bursts of data, identical in nature. But the data size is different:

Burst When Muted

Burst When Unmuted

The unmuted burst is nearly twice the size of the muted burst.

My theory as I was doing this: the more we talk, the larger the bursts are. Let’s look at one of the times when we had a multitude of conversations and asked Alexa questions.

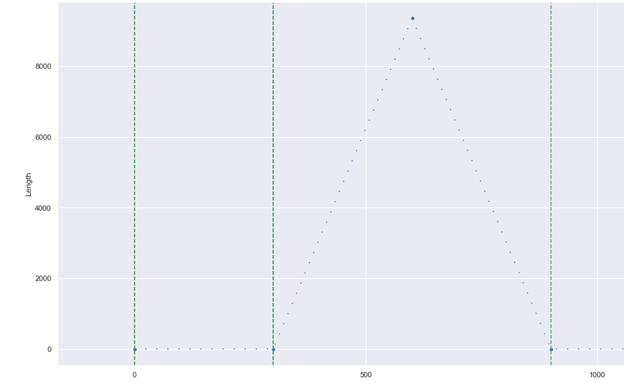

Oddly enough, in both of the recordings that had direct questions and conversations, the bursts were the exact same size as with the muted Alexa:

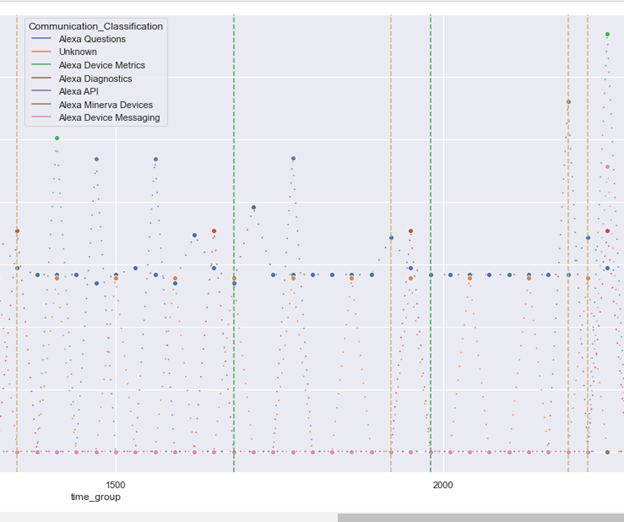

The green vertical lines represent points in time where there were conversations near the echo device. Additionally, after asking Alexa Questions, the Device Messaging also did not change:

Black lines represent asking Alexa questions and yellow lines represent single-sided conversations (e.g. responding to somebody not in the room).

Okay, so unmuted and muted conversations are essentially identical. What about when compared to active conversations and asking Alexa questions? We know from the charts above that the “Device Messaging” domain didn’t change, but what about the rest?

Before I even look at it, I am guessing that the Alexa Questions server will be well above the baseline due to asking it questions. Other than that, I have no idea.

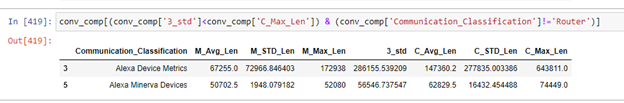

Okay so – Alexa Questions, as suspected, is significantly higher. Outside of this, we also have Device Metrics and whatever Minerva Devices is. I still want to check an assumption. If I remove the data where I asked Alexa questions, Alexa Questions should go back to baseline level data transfer, because it should be the equivalent of not asking questions.

Removed the timestamps – and voilà!

So that’s good news and should be expected behavior. Now the only remaining questions are about Device Metrics and Minerva Devices.
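Removing the question timestamps can be sketched as dropping every packet that falls inside a window around each annotated question. The window sizes here (5 seconds before, 30 seconds after) are assumptions chosen for illustration, as is the function name.

```python
import pandas as pd

def drop_question_windows(df, question_times, before=5.0, after=30.0):
    """Remove packets within a window around each annotated question."""
    keep = pd.Series(True, index=df.index)
    for t in question_times:
        # between() is inclusive on both ends by default.
        keep &= ~df["Time"].between(t - before, t + after)
    return df[keep].reset_index(drop=True)
```

The filtered frame can then be compared against the muted baseline in the same way as the other recordings. A window that is too short would leave part of the response traffic in place, so the sizes are worth tuning against the charts.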

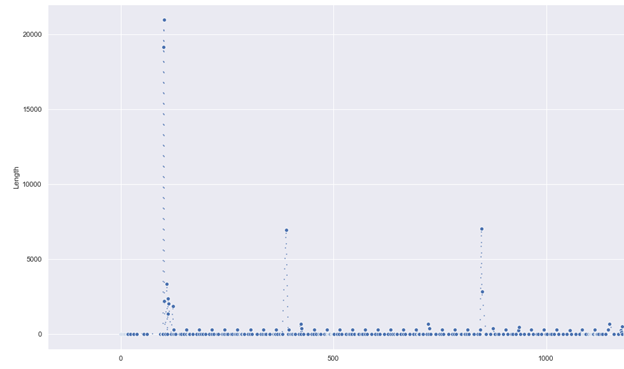

If I am to look at the muted behavior of Device Metrics I get something like this:

Except for the massive spike, all the other spikes are well within normal. It is curious as to why there is such a large spike for device metrics at a time where Alexa wasn’t even being asked questions.

If I compare this to the second recording with marked conversations, the largest spike happens right after two conversations, which could be coincidence. This will need to be evaluated further with more recordings – specifically the one thought I have as a possibility (if we are to assume Alexa is transcribing and sending conversational transcripts) is that the conversation topic triggers whether Alexa sends it back.

The second recording has a similar setup too with the same 3 domains being higher:

However, the spike is almost the exact same number of bytes as the muted Alexa for Device Metrics. Something still to explore is whether keywords have an effect on any of the data transfer rates, but at the moment it doesn’t seem like it.

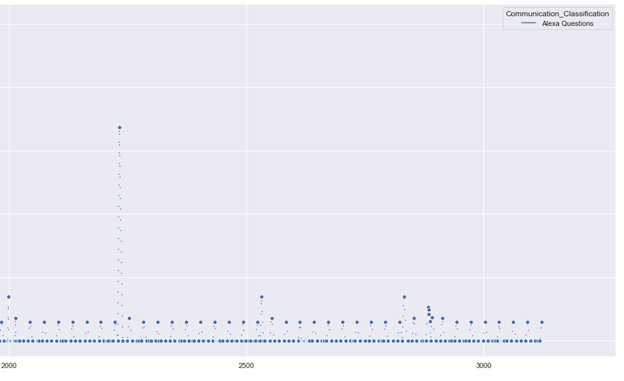

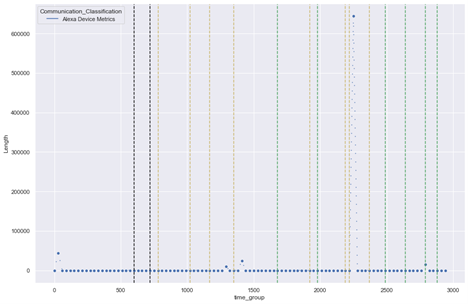

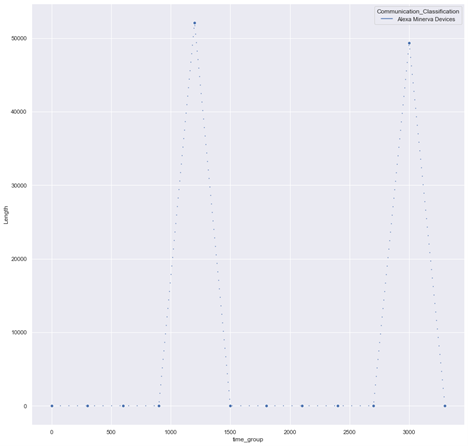

The only traffic outside of baseline goes to this Minerva Devices domain (it is specifically always A1RABVCI4QCIKC.us-east-1.prod.service.minerva.devices.a2z.com). The only thing I can find about Minerva is that it has to do with IPTV – strange, since nothing in the entire house used a TV during any of these 4 recordings.

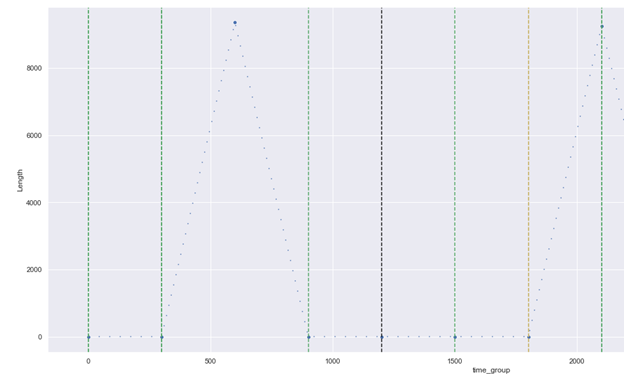

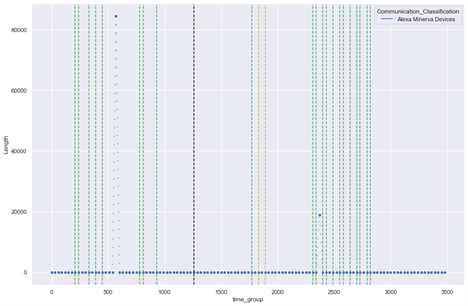

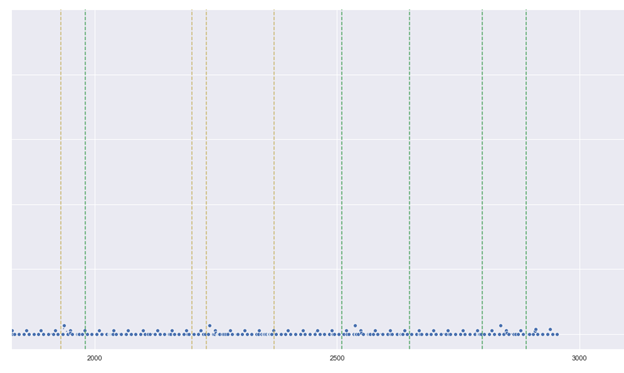

Let’s look at all 4:

Muted:

Unmuted:

Conversation 1:

Conversation 2:

This is odd because the only thing that seems to correlate is nearby conversations. This will require more recordings to test.

So, the interesting aspect is that while there were zero commands, I did not stop conversations from happening while unmuted. The two blips at the very end line up pretty well with when two conversations actually did occur.

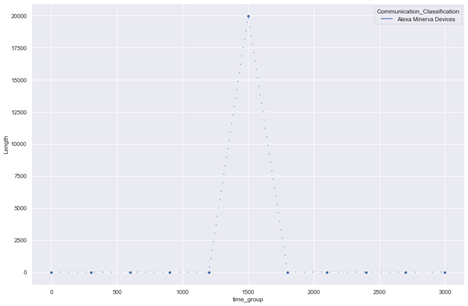

Of course, the length of these packets is only about 16,000 bytes, so I am sure some people are saying to themselves “that’s not really enough data”. Well, let’s look back at when I directly asked it questions.

Peaks at around 20,000 – 24,000 bytes per question. Given that none of my Echo devices are new enough to process locally – this means that either 1) Echo devices do actually process voice locally or 2) the voice recordings are super compressed and then sent to Amazon. I will go with option 2 given that they are selling local voice processing as a new feature – which still means that there was something odd during the no-wake recording around the time the conversations happened. I unfortunately forgot to mark the exact timestamp of the conversations, but the second conversation was literally right before I turned the recording off.
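To put the 16,000 byte and 20,000 – 24,000 byte figures in perspective, here is a back-of-the-envelope conversion from bytes to seconds of audio. The bitrates are purely illustrative assumptions (I do not know what encoding the Echo actually uses), and TLS and protocol overhead are ignored, so the real audio payload would be somewhat smaller.

```python
def seconds_of_audio(num_bytes, bitrate_kbps):
    """Seconds of audio that fit in num_bytes at a constant bitrate."""
    return num_bytes * 8 / (bitrate_kbps * 1000)

# Assumed bitrates: 16 and 32 kbps stand in for a compressed speech codec,
# 256 kbps is uncompressed 16-bit mono PCM at 16 kHz (16000 * 16 bits/s).
for kbps in (16, 32, 256):
    print(kbps, "kbps:",
          seconds_of_audio(16_000, kbps), "s in 16,000 bytes,",
          seconds_of_audio(24_000, kbps), "s in 24,000 bytes")
```

Under these assumptions, 16,000 bytes is half a second of uncompressed audio but several seconds of compressed speech, which is in the same range as a short question.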

That said, other points of time during the marked conversation do not fit this bill:

There are a multitude of conversations here, with none of them causing a spike in outbound data. But for the moment, let’s assume that the spike during the non-woken session wasn’t a fluke – how else could we explain the differences?

- There is some local processing, and it looks for “data words” to only send useful conversations.

- Technically – there does have to be some kind of local processing in order for the wake word to work

- This is something I want to test when I get the time

We already know that this is the case with the words “Alexa”, “Computer” and “Echo” – so why wouldn’t other wake words be possible (e.g. “Buy”, “purchase”, “online”)? This would also be beneficial for Amazon to do, given the amount of research showing that voice transcripts allow Amazon to infer advertising interests and therefore make more money.